TECHNOLOGY | 05.06.2020

A brain-computer interface that “translates” our thoughts

Microsiervos

Considerable effort is going into brain-computer interfaces that can “read” our thoughts or, more precisely, the words or images that we are focusing on and want to convey at a given moment. This could greatly streamline how we interact with all kinds of devices, whether by issuing simple commands, sending messages or giving quick responses.

Now, a team of neuroscientists from the UCSF Neuroscience Center (USA) has published an article on the topic in Nature Neuroscience entitled “Machine translation of cortical activity to text with an encoder-decoder framework.” Behind these complicated technical terms lies a simpler idea: the researchers took techniques already used to translate text between languages and applied them to “translating” brain signals into normal, everyday text. In other words, the kind of artificial intelligence that we use to translate German into English can also be used to translate the “language of thoughts” into English.

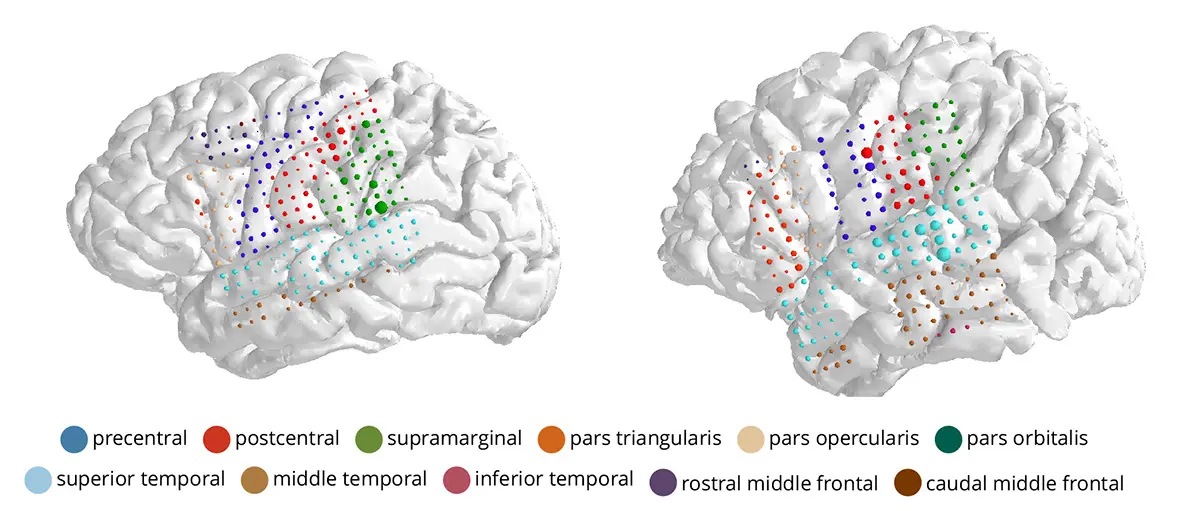

Unlike other comparable systems, this one relies on electrocorticography: brain signals are recorded by about 250 small electrodes implanted under the skull. Other similar, less invasive systems use a kind of swimming cap with cables coming out; that, or something akin to a headband or an eyeglasses frame, would be an ideal gadget for talking to computers or other machines, such as cars, in the future. The system analyzes these signals for recognizable patterns using algorithms similar to those that analyze voices or the images in a movie.

Until now, these signals were converted to text with somewhat lower accuracy and speed than voice-to-text conversion (which also uses techniques typical of artificial intelligence). The new system departs from the traditional technique of identifying phonemes in order to recognize words. Instead, it works on whole sentences and identifies the words within them, in the same way as systems that translate text between languages. Because certain words are statistically more likely to appear together, recognizing them becomes easier.

Several women who already had electrode implants for monitoring epileptic seizures volunteered for the tests and were separated into groups. The signals, of course, varied from person to person and depended on where the electrodes were implanted. Training took only half an hour; it consisted of reading between 30 and 50 sentences containing about 250 different words: “The child is turning four years old,” “several kids are in the room,” “four candles are lit on the cake” and similar sentences. A combination of sentences and drawings was also used in one of the groups. This gave the system a basis for recognizing which part of the sentences keywords such as “child,” “cake” and “room” belonged to.

The same algorithm was then fed electrocorticograms recorded while the volunteers read other, different sentences, to see how many words it could identify. The result showed an unusually high level of accuracy: an error rate of only 7 percent for half of the volunteers, compared with 50 or 60 percent for other similar systems.
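The article does not say how the error rate was calculated. Assuming it is a word error rate, the usual metric for speech-to-text systems, it can be computed as the smallest number of word substitutions, insertions and deletions needed to turn the decoded sentence into the reference sentence, divided by the number of words in the reference. The following Python sketch illustrates that calculation; the function name and the decoded example sentence are hypothetical.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference sentence must contain at least one word")

    # previous_row[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous_row = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current_row = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            current_row.append(min(
                previous_row[j] + 1,                           # reference word missing
                current_row[j - 1] + 1,                        # extra hypothesis word
                previous_row[j - 1] + (ref_word != hyp_word),  # match or substitution
            ))
        previous_row = current_row
    return previous_row[-1] / len(ref)

# Hypothetical decoded sentence with one wrong word out of seven.
wer = word_error_rate(
    "four candles are lit on the cake",
    "four candles are lit on the lake",
)
print(f"{wer:.1%}")
```

With one wrong word in a seven-word sentence, the rate is 1/7, or roughly 14 percent. Under this assumed metric, an error rate of 7 percent would correspond to about one wrong word in every fourteen.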

Another promising result of this research is that, in addition to being reliable, the system was extremely flexible. Not everyone read the sentences correctly, and sometimes there were pauses or sounds (“hmmm…”), but the system was still able to recognize the keywords correctly in their context. Likewise, not all of the women had electrodes implanted in exactly the same place, but the system and the calculations performed by its algorithms were able to carry over what had been learned (equivalents of phrases and words) from person to person. This suggests that these learning algorithms may be able to “translate” the words a person is thinking of not only accurately but also flexibly, in much the same way that people can understand a language more or less independently of who is speaking to them.